I have a client whose website I’ve been managing for over a decade.

Long before I was working with them, the client registered their domain with a company called NameSecure. As you can see by clicking that link, their website still exists, but they were long ago purchased by Network Solutions (one of the worst companies in the world). NameSecure’s zombie, circa-2002 website lives on, though. I can still log into the client’s account. I’ll come back to that in a minute.

When I started working with the client, my preferred hosting company was Media Temple. As you can see by clicking that link, their website doesn’t exist anymore, after they were acquired and dismantled several years ago by GoDaddy. The company whose name, I still believe, was supposed to be “God Addy,” as in “the god of addresses,” when some nascent techbro registered it in 1999, before marketing stepped in and retconned it to the slightly less awful name that the world has known ever since they kicked off their sexist, years-long Super Bowl ad campaign of the early 2000s. (And yes, I’ve read the contrary history on Wikipedia.)

GoDaddy mostly absorbed everything Media Temple-related, and the client set me up with “Delegate Access” so I can get into their GoDaddy account. That’s where we made our DNS changes a few years ago to point to the DigitalOcean server I was running their site on until DigitalOcean became a disaster. And it’s where I went again last year when I moved their site to WP Engine. (Don’t even get me started.)

Well, they’ve had it with WP Engine’s ludicrous overage charges (and so have I), so now we’re moving on to the hosting-company-that-only-mostly-sucks-rather-than-completely-sucks du jour, Hostinger. So far the worst I can say about Hostinger is that sometimes their web control panel just stops responding. But I blame React (and therefore, indirectly, Facebook/Meta) for that.

I’ve got their site ready to go on Hostinger, so now it’s just down to the DNS update.

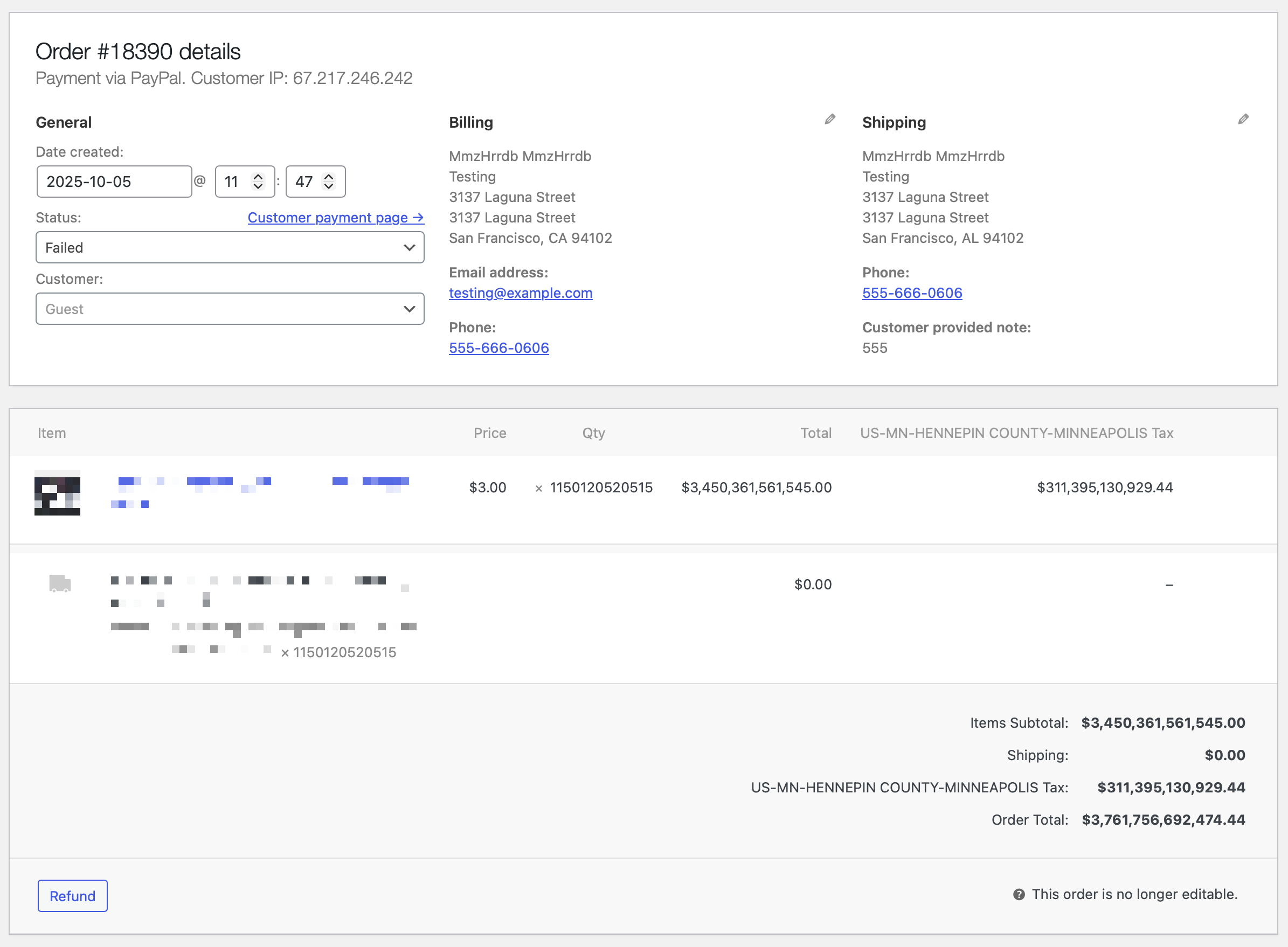

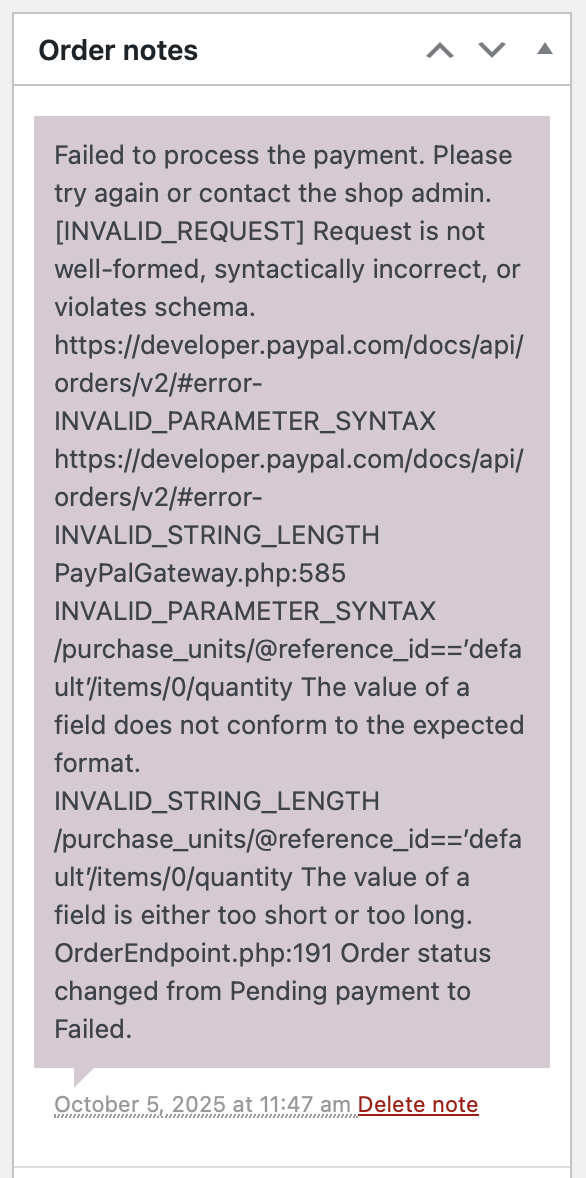

I started by logging into GoDaddy, and was surprised to see there were no domains listed for their account. Then I pieced together what must have happened. We had canceled their Media Temple hosting several years ago, but there was still a (no longer necessary) annual SSL certificate auto-renewal that kept happening. The client finally reached out to me in time to stop the auto-renewal this year, so we canceled it, effectively ending all of the client’s paid services with GoDaddy. But their domain continued to use the Media Temple name servers.

I’m just guessing here, but it seems that canceling the last paid GoDaddy service also shut off access to the domain management tools. So, their undead DNS zone file endures on Media Temple’s zombie name servers, but with no way to make any edits.

No worries, I thought. Let’s just jump back to NameSecure. It’s ancient, but it is where their domain is actually registered, and they do let you set your domain to use their own name servers. (I always prefer to keep name servers at the registrar, whenever possible, anyway.)

But… oh dear. NameSecure’s zone file editor only lets you create A, MX, and CNAME records. No TXT records. Seriously… no TXT records??? In 2025, those are essential for a variety of reasons. So, we can’t do that.
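To make the gap concrete, here is a minimal Python sketch that checks the records a site needs against the record types a zone editor can create. Everything in it is illustrative: `SUPPORTED_TYPES` mirrors the A, MX, and CNAME limit described above, and `NEEDED_RECORDS` uses placeholder names and documentation-range addresses, not the client’s real zone.

```python
# Hypothetical example data, not an export of any real zone.
SUPPORTED_TYPES = {"A", "MX", "CNAME"}  # the only types the editor described above allows

NEEDED_RECORDS = [
    ("A", "example.com.", "203.0.113.10"),
    ("MX", "example.com.", "10 mail.example.com."),
    ("CNAME", "www.example.com.", "example.com."),
    ("TXT", "example.com.", "v=spf1 include:_spf.example.net ~all"),
]


def unsupported_records(records, supported_types):
    """Return the records whose type the zone editor cannot create."""
    return [record for record in records if record[0].upper() not in supported_types]


if __name__ == "__main__":
    for rtype, name, value in unsupported_records(NEEDED_RECORDS, SUPPORTED_TYPES):
        print(f"cannot create {rtype} record for {name}: {value}")
```

With this sample data, the only record that fails the check is the TXT one, which is exactly the problem.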

OK… then I guess our only alternative is to… ugh… move the name servers over to Hostinger. That is obviously what Hostinger wants us to do. That’s what hosting companies always want you to do, and the main reason I don’t want to do it. It’s a lot more of a pain to switch hosting companies when your current host is handling your DNS. It’s not truly lock-in, but it might as well be.

Anyway, that’s where we are. No one back in the late ’90s and early 2000s planned for the 2025 domain registrar zombie apocalypse.

And now, I’m just sitting here, waiting for the name server change to propagate, so I can actually edit the client’s zone file in Hostinger. This is the next-worst thing I can say about Hostinger: if you host domains, let your customers edit the DNS zone file even before the domain is using your name servers! It would be so, so nice if I could plug in all of the client’s MX records while I’m waiting for propagation. As it is, there’s going to be some indeterminate window when their email absolutely will stop working: it opens when the name server change propagates (which is also when Hostinger finally lets me edit the damn zone file) and closes when I get those records plugged in and they propagate too.
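One thing that can be done while waiting is to copy the existing mail records off the old name servers, so they are ready to paste in the moment the zone file unlocks. The following is a rough Python sketch, assuming the `dig` command-line tool is installed and the old name servers still answer queries. The function names are my own, and any domain or name server passed in is up to you.

```python
import subprocess


def parse_mx_lines(text):
    """Parse `dig +short MX` style output into sorted (priority, host) pairs."""
    records = []
    for line in text.splitlines():
        parts = line.split()
        # Keep only lines shaped like "<priority> <host>"; skip blanks and noise.
        if len(parts) != 2 or not parts[0].isdigit():
            continue
        records.append((int(parts[0]), parts[1]))
    return sorted(records)


def fetch_mx(domain, nameserver):
    """Ask one specific name server for a domain's MX records by shelling out to dig."""
    result = subprocess.run(
        ["dig", f"@{nameserver}", domain, "MX", "+short"],
        capture_output=True,
        text=True,
        timeout=15,
        check=True,
    )
    return parse_mx_lines(result.stdout)
```

Calling `fetch_mx("example.com", "ns1.example.net")` with the real domain and one of the old name servers in place of those placeholders returns the MX list in priority order. The same approach works for TXT records, though their quoted values need different parsing.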

Ideally, this should only take a few minutes. But name server changes can take anywhere from a few minutes to a day or more to propagate. Do I just need to sit here on the edge of my seat that entire time?

I guess so.
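Or, more realistically, a script can do the sitting. Here is a minimal Python sketch that polls until the domain reports the new name servers. It assumes `dig` is installed. The expected name server names are whatever the new host tells you to use, and the function names and defaults are my own. It only reflects what the local resolver sees, which may be cached, so a match here does not prove the change has reached everyone.

```python
import subprocess
import time


def lookup_ns(domain):
    """Return the set of NS host names the local resolver reports, by shelling out to dig."""
    result = subprocess.run(
        ["dig", "+short", "NS", domain],
        capture_output=True,
        text=True,
        timeout=15,
    )
    return {
        line.strip().rstrip(".").lower()
        for line in result.stdout.splitlines()
        if line.strip()
    }


def wait_for_ns(domain, expected, lookup=lookup_ns, interval=60, max_checks=1440, sleep=time.sleep):
    """Poll until the reported NS set equals `expected`.

    Returns True on a match, or False if max_checks lookups pass without one.
    """
    wanted = {ns.rstrip(".").lower() for ns in expected}
    for attempt in range(max_checks):
        if lookup(domain) == wanted:
            return True
        if attempt < max_checks - 1:
            sleep(interval)  # wait before asking again
    return False
```

With the defaults, this checks once a minute for up to a day. The `lookup` and `sleep` parameters exist so the loop can be exercised with canned answers instead of real DNS queries.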

Well, that has me all caught up on this stupid situation. I don’t have much else to say about it… I just have to wait. But all of this just feels like another nail in the coffin of this stupid industry that never planned ahead and also never seems to learn from past mistakes.